Improving surgeons’ performance with AI

Robin Vergouwen

I recently read an article on coaching in surgery that Atul Gawande published in 2011. In it, Gawande, a surgeon at Brigham and Women’s Hospital in Boston, raises the question: if athletes and singers have coaches to improve their performance, why shouldn’t surgeons have coaches too? He noticed that his surgical skills had stagnated after some years of practice, and they improved tremendously once he asked a former colleague to coach him.

Nowadays, many athletes not only have a coach but also use video analytics to continuously improve their performance. So again we could ask: why not apply this to the medical field as well? This is exactly what we are developing at Incision. Using Artificial Intelligence, we perform detailed video analysis of laparoscopic procedures. With the information we extract from these analyses, we can give residents (surgeons in training) standardized feedback consisting of clearly defined measures and unbiased assessments. Moreover, residents can compare their results with a benchmark to gain insight into the quality of their performance.

In this article, I explain which performance analytics we conduct and how they contribute to improving surgical skills. Our analytics focus on laparoscopic procedures, and in this blog I use Laparoscopic Cholecystectomy (the removal of the gallbladder) as a specific example.

Computer Vision: the Core of Our Analytics

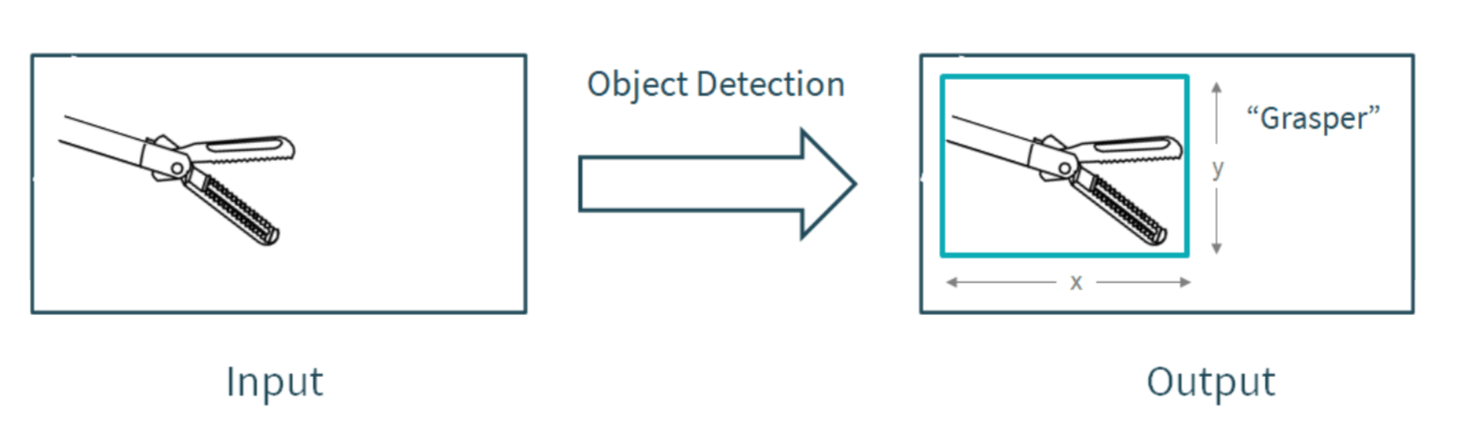

At the core of our video analytics lies a technology called Computer Vision. With this technique, we teach an algorithm to understand what is happening in an image or a video. Specifically, we perform object detection on laparoscopic videos, meaning that we detect which surgical instrument (object) is present in an image or video frame, and also where it is located.
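To make "which instrument, and where" concrete, here is a minimal sketch of what a single frame's detections could look like. The `Detection` structure, the `(x_min, y_min, x_max, y_max)` pixel box format, the 0.5 confidence threshold and the example values are all hypothetical choices for illustration; the article does not describe Incision's actual output format.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Detection:
    # Hypothetical output record for one detected object in one frame.
    label: str                                # which instrument, e.g. "grasper"
    confidence: float                         # model score in [0, 1]
    box: Tuple[float, float, float, float]    # where: (x_min, y_min, x_max, y_max) in pixels


def keep_confident(detections: List[Detection], threshold: float = 0.5) -> List[Detection]:
    """Drop low-confidence detections (the 0.5 threshold is an assumed value)."""
    return [d for d in detections if d.confidence >= threshold]


def box_center(box: Tuple[float, float, float, float]) -> Tuple[float, float]:
    """Centre point of a bounding box, a simple summary of an instrument's location."""
    x_min, y_min, x_max, y_max = box
    return ((x_min + x_max) / 2, (y_min + y_max) / 2)


# Made-up detections for a single frame.
frame_detections = [
    Detection("grasper", 0.92, (120, 80, 260, 200)),
    Detection("clipper", 0.31, (400, 150, 520, 300)),
]
confident = keep_confident(frame_detections)
print([(d.label, box_center(d.box)) for d in confident])  # [('grasper', (190.0, 140.0))]
```

Keeping the label, score and box together per detection makes it straightforward to aggregate detections over many frames later on.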

Convolutional Neural Network

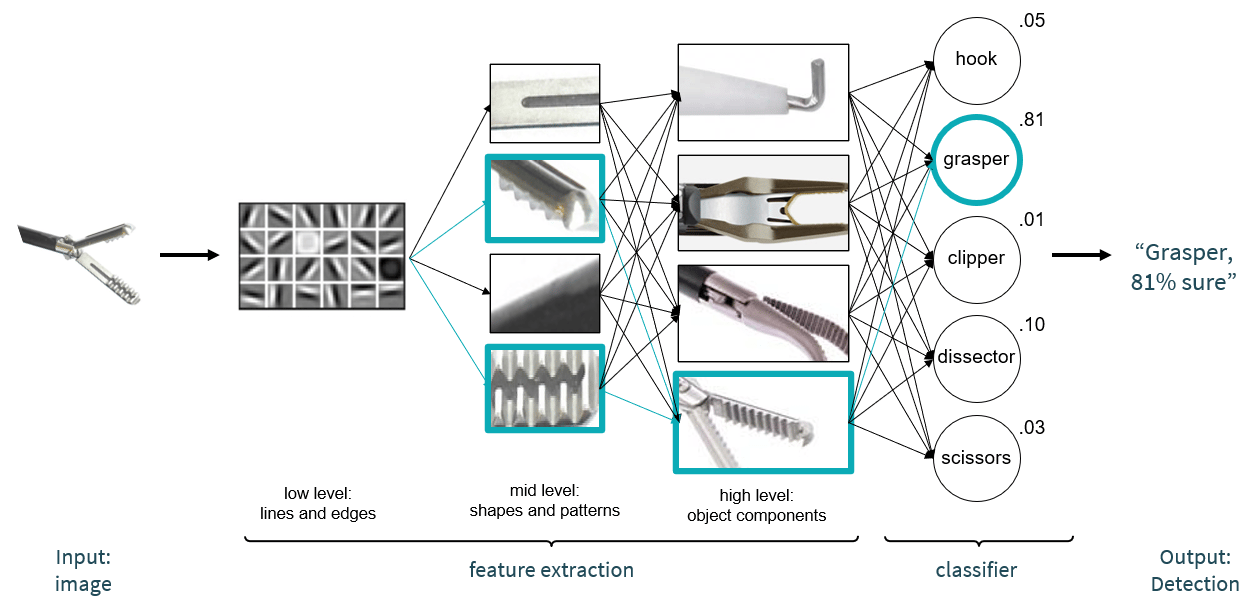

So what is object detection exactly? To explain how this technology works, we have to dive into the concept of a Convolutional Neural Network. Neural networks are algorithms built from many layers of simple calculations whose parameters are learned from example data. A Convolutional Neural Network consists of multiple layers that break down an input image into low-, mid-, and high-level features. This structure is inspired by the visual system of the human brain, so those of you with a background in neuroscience may recognize the following sequence of events. The first layers of the network break down an image into low-level features, meaning that the image is decomposed into many combinations of simple lines and edges. Certain combinations of lines and edges activate the next layers of the network, which contain mid-level features (shapes and patterns). Specific combinations of shapes and patterns in turn activate different high-level features: object components. Finally, from this pattern of activations throughout the layers of the network, a prediction is made about which object the input image contains.
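The idea of a first layer that responds to "lines and edges" can be illustrated with a single convolution. This is a minimal sketch, not the network used at Incision: the 5x5 toy image and the hand-picked Sobel-style kernel are assumptions for illustration, whereas in a real CNN the kernel values are learned during training.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D cross-correlation (no padding), the operation CNN layers apply."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel.
            acc = 0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map: Matrix) -> Matrix:
    """Keep positive responses only, a common activation after a convolution."""
    return [[max(0, v) for v in row] for row in feature_map]


# Toy 5x5 image: dark left part (0) and bright right part (1), i.e. one vertical edge.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked Sobel-style kernel that responds to vertical edges.
vertical_edge = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

edges = relu(conv2d(image, vertical_edge))
for row in edges:
    print(row)  # each row prints [4, 4, 0]
```

The feature map is large where the window straddles the dark-to-bright transition and zero over the uniform bright area. Deeper layers apply the same operation to such feature maps, which is how combinations of edges can build up into shapes and object parts.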

The figure gives a simplified overview of how a Convolutional Neural Network works. An image is broken down into low-, mid-, and high-level features. The combination of low-level features activates the mid-level features with the ribbed pattern. Then, the combination of activated mid-level features activates the high-level feature that contains the top of a grasper. This activated high-level feature is associated with the prediction ‘grasper’, and as a result, the final prediction is a grasper (which is correct!).
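The last step, turning high-level activations into a single prediction, is commonly done with a softmax over per-class scores followed by picking the most probable class. The sketch below assumes that standard setup; the class list and the scores are hypothetical numbers, not output from Incision's model.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical instrument classes and final-layer scores for one image.
classes = ["grasper", "clipper", "scissors", "hook"]
scores = [4.1, 1.2, 0.3, 0.9]

probs = softmax(scores)
prediction = classes[probs.index(max(probs))]
print(prediction)  # grasper
```

Because the ‘grasper’ score is by far the largest, nearly all of the probability mass goes to that class, mirroring the grasper example in the figure.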

From Instrument Detection to Feedback Generation

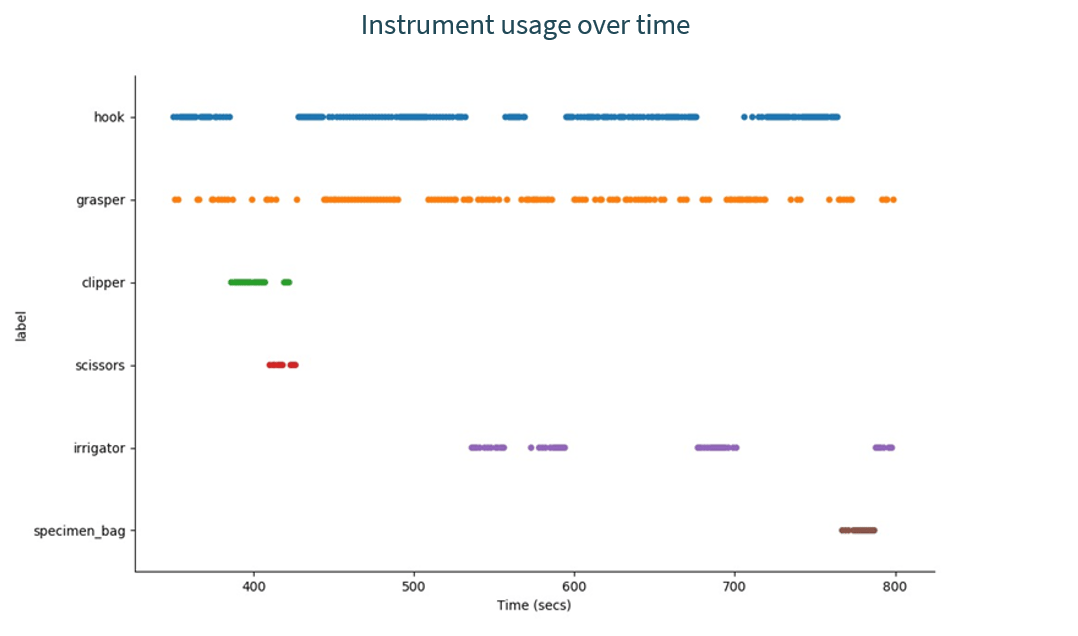

Great, we are able to detect surgical instruments in images! However, we want to generate feedback on videos of surgical procedures. A video consists of many still images, or frames (usually about 30 per second). Thus, we can detect which instruments are present in every 1/30th of a second of a procedure. From these detections, we can create a single overview of how the instruments were used during a procedure, as shown in the instrument-usage graph.
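One way to build such an overview is to collapse the per-frame detections into time intervals per instrument. This is a minimal sketch assuming a fixed frame rate of 30 frames per second and per-frame detections already reduced to sets of instrument names; the function name `usage_intervals` and the example frames are hypothetical.

```python
from typing import Dict, List, Set, Tuple

FPS = 30  # assumed frame rate, matching "about 30 per second"


def usage_intervals(frames: List[Set[str]], fps: int = FPS) -> Dict[str, List[Tuple[float, float]]]:
    """Turn per-frame instrument sets into (start_s, end_s) intervals per instrument.

    end_s is the moment the instrument is no longer detected (exclusive end).
    """
    intervals: Dict[str, List[Tuple[float, float]]] = {}
    active_since: Dict[str, int] = {}  # instrument -> frame index where its current run began
    # An empty sentinel frame at the end closes any intervals still open.
    for idx, present in enumerate(frames + [set()]):
        for inst in present:
            active_since.setdefault(inst, idx)
        for inst in list(active_since):
            if inst not in present:
                start = active_since.pop(inst)
                intervals.setdefault(inst, []).append((start / fps, idx / fps))
    return intervals


# Made-up example: 2 s of grasper only, 1 s of grasper + hook, 1 s of hook only.
frames = [{"grasper"}] * 60 + [{"grasper", "hook"}] * 30 + [{"hook"}] * 30
timeline = usage_intervals(frames)
print(timeline)
```

Each entry of the result corresponds to one bar in an instrument-usage graph; an instrument that is removed and later reinserted simply gets a second interval.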

Surgical Phase Segmentation

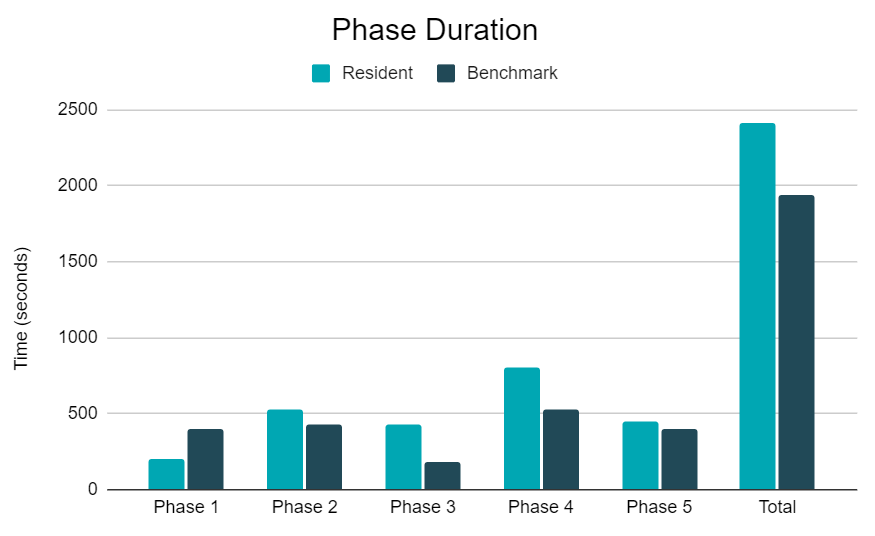

Since the instruments in use are highly correlated with the surgical phases within a procedure, we can now infer when each phase starts and ends. For example, the use of the clipper and scissors indicates that the surgery is in the third phase ('cystic artery and cystic duct transection'). As a result, we know that this phase starts when the clipper is inserted and ends when the scissors are no longer in use. Similarly, other instruments mark the beginning or end of the remaining phases, as illustrated in the figure.
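The rule for the third phase can be written down directly. This sketch assumes instrument usage is available as per-instrument lists of `(start, end)` intervals in seconds; the function name and the example timings are hypothetical, and it encodes only the clipper/scissors rule described above, not the rules for the other phases.

```python
from typing import Dict, List, Optional, Tuple

Intervals = Dict[str, List[Tuple[float, float]]]


def transection_phase(usage: Intervals) -> Optional[Tuple[float, float]]:
    """Phase 3 ('cystic artery and cystic duct transection'):
    starts when the clipper is first inserted, ends when the scissors are last in use."""
    clipper = usage.get("clipper")
    scissors = usage.get("scissors")
    if not clipper or not scissors:
        return None  # the phase cannot be inferred from the instruments alone
    start = min(s for s, _ in clipper)
    end = max(e for _, e in scissors)
    return (start, end) if end > start else None


# Made-up instrument usage (seconds since the start of the video).
usage = {
    "grasper": [(0.0, 900.0)],
    "clipper": [(610.0, 640.0), (655.0, 680.0)],
    "scissors": [(641.0, 652.0), (690.0, 705.0)],
}
print(transection_phase(usage))  # (610.0, 705.0)
```

Returning `None` when an instrument is missing keeps the rule explicit about the cases it cannot handle, rather than guessing a boundary.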

Now that we know when each phase took place, we also know how long each phase lasted. These durations can then be compared to a benchmark.
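The article does not specify how the comparison with the benchmark is made. As one plausible option, this sketch assumes the benchmark stores a mean and standard deviation of the duration per phase and reports a z-score; the phase keys and all numbers are hypothetical.

```python
from typing import Dict, Tuple


def compare_to_benchmark(
    phases: Dict[str, Tuple[float, float]],
    benchmark: Dict[str, Tuple[float, float]],
) -> Dict[str, float]:
    """Return a z-score per phase: (duration - benchmark mean) / benchmark std.

    phases maps a phase name to (start_s, end_s); benchmark maps it to (mean_s, std_s).
    """
    result = {}
    for name, (start, end) in phases.items():
        mean, std = benchmark[name]
        result[name] = ((end - start) - mean) / std
    return result


# Made-up phase boundaries for one procedure and a made-up benchmark.
phases = {"phase_2": (120.0, 720.0), "phase_3": (720.0, 840.0)}
benchmark = {"phase_2": (480.0, 120.0), "phase_3": (150.0, 30.0)}

scores = compare_to_benchmark(phases, benchmark)
print(scores)  # {'phase_2': 1.0, 'phase_3': -1.0}
```

A positive score means the phase took longer than the benchmark average, a negative score that it was faster; expressing this in standard deviations makes phases of very different lengths comparable.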

Efficient Instrument Usage

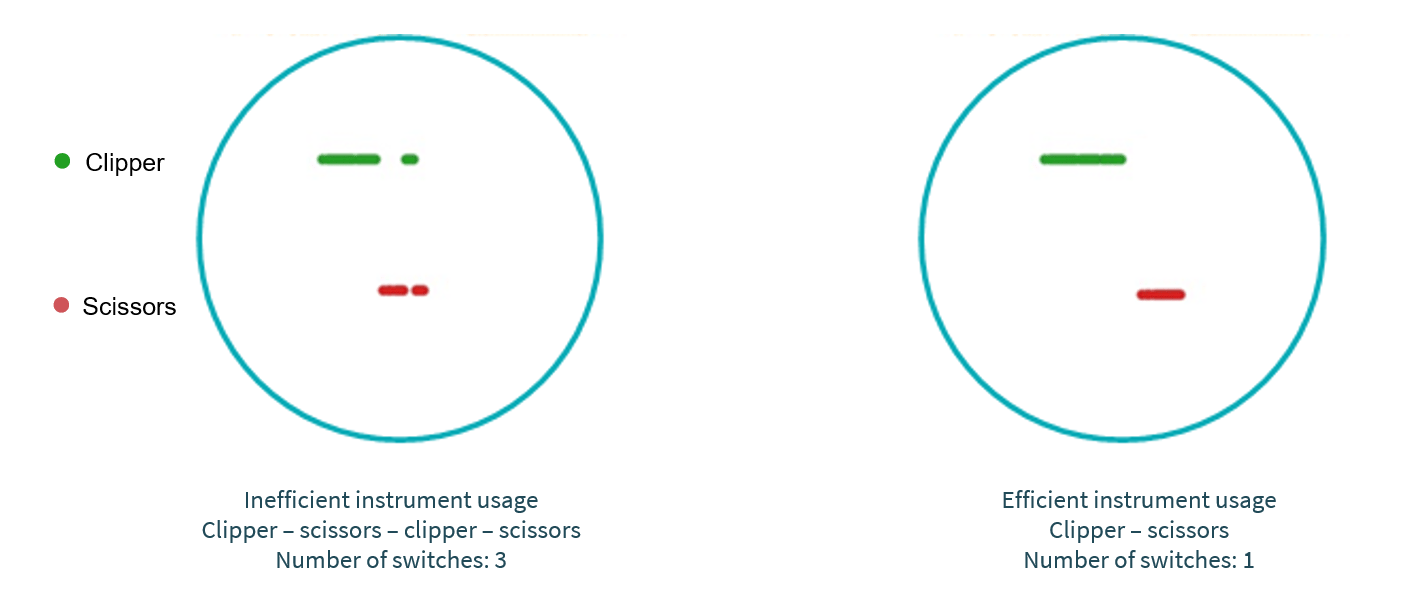

Another aspect we can look at is the efficiency of instrument usage. For example, at one moment in every Laparoscopic Cholecystectomy, two structures (the cystic artery and the cystic duct) have to be clipped with the clipper and cut with the scissors. These structures lie close to each other, so it is most efficient, in terms of instrument usage, to clip both structures first and cut them afterwards. However, a resident might instead clip and cut one of them, and then clip and cut the other. In that case, we see a pattern of switches between the clipper and the scissors, as can be observed in our example graph of instrument usage over time. This is an example of inefficient instrument usage, since a reasonable goal is to keep instrument switches to a minimum.
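The difference between the two strategies shows up clearly if we count switches between the clipper and the scissors. This minimal sketch assumes the relevant actions are available as an ordered list of instrument names; the function name and both sequences are hypothetical.

```python
from typing import List, Sequence


def count_switches(sequence: List[str], tools: Sequence[str] = ("clipper", "scissors")) -> int:
    """Count transitions between different tools, ignoring all other instruments."""
    relevant = [t for t in sequence if t in tools]
    return sum(1 for prev, cur in zip(relevant, relevant[1:]) if prev != cur)


efficient = ["clipper", "clipper", "scissors", "scissors"]    # clip both, then cut both
inefficient = ["clipper", "scissors", "clipper", "scissors"]  # clip-cut, then clip-cut
print(count_switches(efficient))    # 1
print(count_switches(inefficient))  # 3
```

Clipping both structures before cutting needs only one switch, while the clip-cut, clip-cut order needs three, which is the alternating pattern visible in the instrument-usage graph.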

Conclusion: Ongoing Work

These are only the basics of our feedback report for laparoscopic surgery. Together with surgeons and residents, we are complementing them with more complex measurements targeted at providing feedback on the key skills of each procedure. These highly relevant surgical measurements give residents concrete guidelines for improving their performance, and with them we aim to help residents drastically steepen their learning curve.

We would also love to hear from you! What kind of feedback or insights would you like to receive on surgical performance? Feel free to reach out if you have any questions regarding our product or if you want to be involved.

About Robin

With an academic background in both Cognitive Neuroscience and Computer Science, Robin is intrigued by the similarities and differences between the human brain and the 'computer brain'. How do we perceive the world around us? How do we see what is present in an image or a video? And how can we make a computer understand what is present in an image or a video? These are some of the questions which she tries to answer on a daily basis by developing AI applications that analyze surgical video material.

This work is an important part of Incision's mission to realize the potential of standardized analysis and feedback generation in the field of surgical training.

Curious what Incision's surgical e-learning platform can do for your hospital?

The Incision Academy contains over 500 high-quality video courses on surgical procedures to prepare for your work. Explore surgical procedures step by step in 2D & 3D, master basic principles and skills, anatomy, medical technology, and much more. We bring together everything that’s required to optimize OR performance.